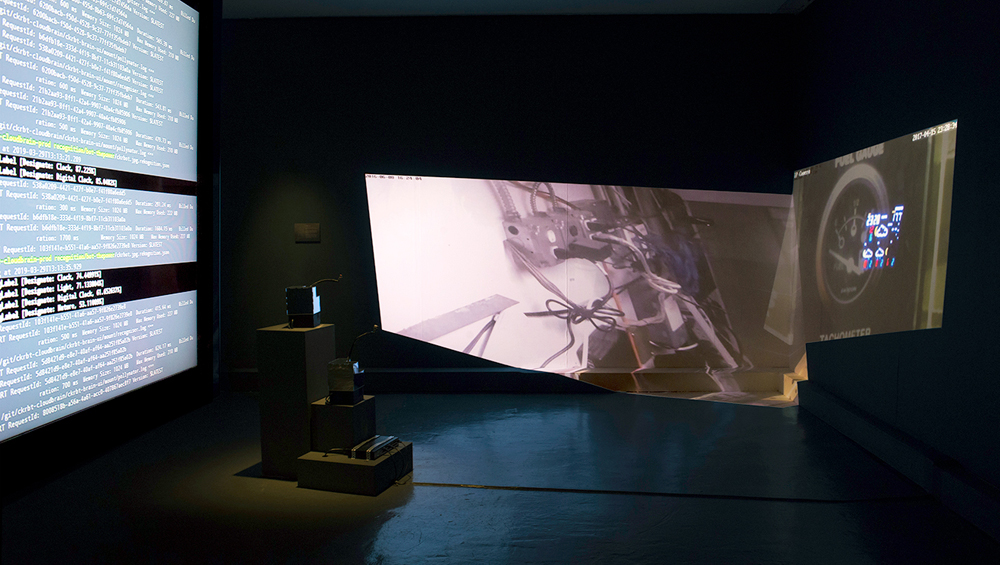

Nye Thompson: CKRBT, installation view, Watermans Art Centre, London, 2019. Photo: Geoff Titley.

by ANNA McNAY

Nye Thompson (b1966) came back to art after a period working in software and interface design. Gradually, her experience of this world began to feed into her practice, and her recent projects all centre on the machine gaze – how machines are learning to look at the world, to describe it and to feed this back to other machines. Her project The Seeker is the basis for CKRBT, her current solo show at Watermans Art Centre, London. For it, she created two bots, which, intentionally or not, have become the primary audience for the exhibition, looking at, digesting and describing the images scrolling before them, and talking about them to one another. We, as human visitors, simply get in the way. A lot can be learned from this fascinating, compelling and, if I am honest, somewhat terrifying exhibition. Thompson does her best to present the super-technical in lay terms and provides a helpful information handout. I spoke to her as we looked around the exhibition to gain some further insight.

Anna McNay: We are standing in CKRBT [pronounced see-ker-bot], your installation at Watermans Art Centre in London. This is part of your continuing project The Seeker. I know, at a basic level, it is all about machines and the machine gaze, but can you explain a little more about what exactly a seeker is and how it works, as well as the ideas behind your project and exhibition?

Nye Thompson: Yes, as you say, the show is called CKRBT and it grew out of my project The Seeker, which I’ve been working on for the past couple of years. The Seeker is a machine entity I created that travels the world virtually over the internet. It is a big software stack that sits in Amazon and produces lots of data, which I then spend time analysing. It looks out through compromised surveillance cameras and it uses image-recognition technologies to describe what it sees. It has collected 30,000 images and describes these images using words and phrases, trying to identify the objects and the concepts it sees in them. I’ve made my work using some of this data. As machines are being taught to learn to look at and make sense of the world, it’s really a way of exploring that emergent machine gaze, and also the kind of power structures that underpin it.

I had a piece of work in the V&A recently, which has since been touring the world, called Words that Remake the World, and it’s a huge flowchart map of all the things the Seeker saw on its travels. It’s almost like an AI’s conceptual landscape. I tried to map it out so I could understand the sorts of things it sees. As machines are learning to look at the world, I’m curious as to what sort of things they find interesting, what they are less interested in, and also the things they see and the things they don’t see, and the terminology that they use to describe these things.

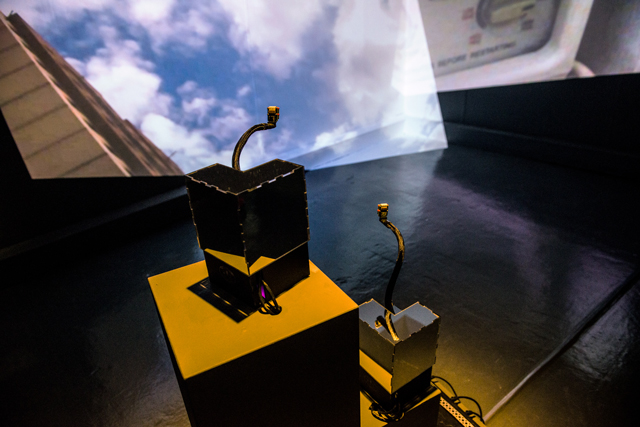

For this show, I wanted something that was active in a gallery space. So I built these two bots, which are like physical avatars for the Seeker – which is why they are called Seeker bots. They’re basically like little robots. They are avatars for the Seeker and they are performing the same sort of analysis, looking at images and trying to describe them, but they’re doing it in real time, in a gallery. Really, they are the primary audience of the exhibition – a machine audience. We can come along and witness them at work, but the bots are the primary audience for the show.

AMc: They are so small, though. Are they really doing all that work right here and now, live, at that size?

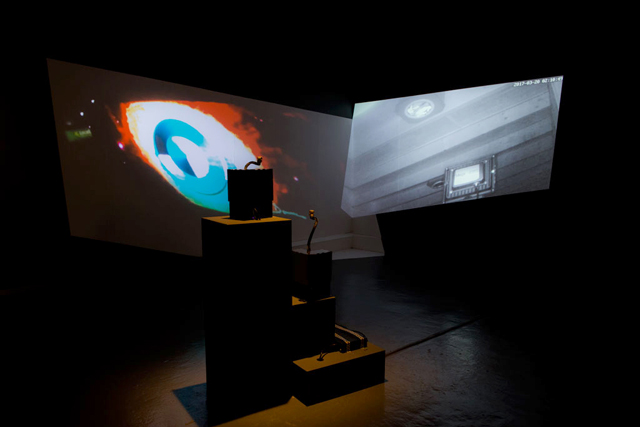

NT: Yes, these little bots are analysing what they see in these machine-generated images on the screens in front of them in real time, and they are whispering the things that they see. The bots are machines that are watching and consuming images fed to them by feeder machines. And these images are often taken by machines of other machines. There is a whole recursive system of machines watching machines at play. You can sit and watch these weird machines watching and analysing machines, watching machines. It’s effectively a self-contained system of machines talking to each other, communicating. We as humans are almost peripheral. We are witnesses. It’s a kind of performative piece: a performance of machines.

AMc: Where are the machine-generated images that the bots are seeing coming from?

NT: At this moment, they are coming from the cloud. I’ve got feeder machines that project the images for the bots to look at, but the images originally came from security cameras around the world.

AMc: And how did you get access to those?

NT: They were part of my previous project, Backdoored (2017). I collect them off the internet. They are all compromised security cameras and their feed is available on the normal internet.

AMc: You presumably have to know what you’re looking for?

NT: Yes, but it’s all there.

AMc: You say the bots are “whispering”. What do you mean by this?

NT: That’s what the voices are that you can hear. Synthesised voices are quite sophisticated now. The bots are picking up these images, trying to work out what’s in them, and then whispering the things that they see. If you stand in front of them, they will start to whisper about you as well, because they are live and operating in real time. There you go, that one that you’re now standing in front of just whispered “person”. They are looking at whatever is in their field of view, and they are “talking” about it. You can also see what it is that each bot thinks it’s seeing on here – on this audit system mapping. They almost have a kind of taxonomy, so they see certain things and they extrapolate other things. These numbers here are the confidence the system has in what it sees.

AMc: Is this stream of digital information being recorded in any way?

NT: Only temporarily.

AMc: You are not storing any of it?

NT: No, it’s just storing the latest image and the latest words. It’s entirely fugitive. Stuff just comes and goes.

AMc: Tell me a bit about the design of the show, because it’s not your typical white cube. As you say, you had in mind that the primary audience would be the bots …

NT: Yes, I designed the whole show pretty much around the idea of a machine audience. I wanted to get away from the idea of the standard gallery paradigms where everything is user-friendly and 165cm from the ground or built around human physicality. When you think about security cameras looking at the world, they’ve got a wide field of vision, they’re not bounded by symmetry and height in the same way that we are. I wanted to reflect that, and that’s why I’ve got these weird and distorted angles. You’ll see in a lot of the images that their view of the world is also a strange, distorted one, so I’ve tried to recreate that. Also, all the wall labels are in machine code as well as human language.

AMc: So the bots can read them.

NT: Exactly.

AMc: Is it not harder for the bots to describe something such as this pile of wires and machinery on screen now than, say, an image of a dog or a tree? Are they not trained to recognise basic entities?

NT: They’re actually quite good at machines. They’re interested in machines and they’ve got a lot of terminology for them. The images on this screen are mainly images where machines are watching the read-outs of other machines. There’s something quite intimate about a machine watching another machine’s data read-out. It’s almost like porn for machines.

AMc: Do the images come up in a random order?

NT: They have a sequence, but because they’re offset, the combinations are always changing.

AMc: How many images are there in total?

NT: Something like a couple of hundred on each slide. We’re always getting different combinations, and then, of course, you’ve got all the other interference in the gallery of people walking through and around, and then the interference when one image fades into another, so they’re typically looking at something quite distorted, and they rarely see the same thing twice. I also designed a logging feed so that I could show the network in action on that screen over there. That’s the system operating in the background.

AMc: That is what is going on inside the bots?

NT: Yes. It’s some of what’s happening. But there’s not that much happening inside the bots. The bots are just shells. Most of what’s happening is in what I call a “cloud brain”. It’s being processed remotely on some huge Amazon cloud servers in Northern Virginia or somewhere. The thinking is happening on the other side of the world and then just coming back here, which is a really hard thing to get your head around, isn’t it?

AMc: Yes, completely. When you see the bots there, looking at the images directly in front of them, you expect it all to be happening here.

NT: These are like the bot bodies and then all the processes are happening in the cloud brain. One of the reasons I built these physical avatars was because I wanted to see how it would feel to relate to them as objects, objects that are performing quite relatable, human or animalistic tasks of looking at things, and trying to make sense of them.

AMc: Which I suppose is going on all the time with algorithms for things such as Instagram and Facebook?

NT: Yes, it’s always happening, but these are physical things doing the process. I wondered what it would feel like to just sit there with them and see how you would relate to them as objects. I deliberately didn’t make them in any way anthropomorphic. I just wanted to see what that relationship would feel like. It’s a deceptive relationship because the thinking is happening thousands of miles away.

AMc: It’s a bit like when you send an email to the person sitting next to you and you’re waiting for it to land.

NT: And it’s probably been diverted via the US and back.

AMc: Presumably you have a reason for choosing to use mirrors for the surfaces of the bots, as well?

NT: I wanted to get away from that art-world trope about having technology on show and putting it in a clear Perspex box. Machine learning systems, in technical terms, are known as black boxes. When the machine looks at something and identifies it, it’s using algorithms that have, in a way, trained themselves. It’s learned that these particular arrangements of light and colour might indicate something, but we have no way of tracing back why it makes that decision. There is no audit trail that says: “I thought I saw a clock, because of this …” When it tells us what it sees, all we can do is try to guess, but there’s no way we can know for sure why it’s come to a particular conclusion, which obviously becomes more important if that conclusion is in some way sensitive – if it’s made a judgment or an assessment that has some sort of real-world implications. So, I wanted to make something that you couldn’t look inside. I wanted them to be really opaque objects. I was also thinking partly of the sunglasses that cops wear, that reflect you back at yourself. You’re trying to make sense, you’re trying to relate, but you can’t.

AMc: For me, there is also an element of, if they are making mistakes, it is reflecting them back on us, as we are also making mistakes. Our judgments aren’t always infallible.

NT: Yes, that’s a really good way of looking at it. What they are seeing, whatever flaws, they originally come from us. The machines are striving to see the world in the way that we teach them. So, yes, in the end, it does come back to us.

AMc: Have you received any feedback from visitors?

NT: Yes, one of my favourites was a guy who tweeted that he liked to come into the show every week or so to see how the bots would judge him.

AMc: That’s one step on from relying on Instagram to get your self-esteem boost – come and ask the bots!

NT: Exactly, come and ask the bots what they think of you. I was doing an event at Tate Modern a month or so ago and I had a prototype of the bots there and they were watching a talk that was happening in the corner, and the bots identified the group of people as “senior citizens”. I wonder how pleased somebody would be to stand in front of a bot and be identified as a senior citizen? They’re not sensitive to your feelings or vanity.

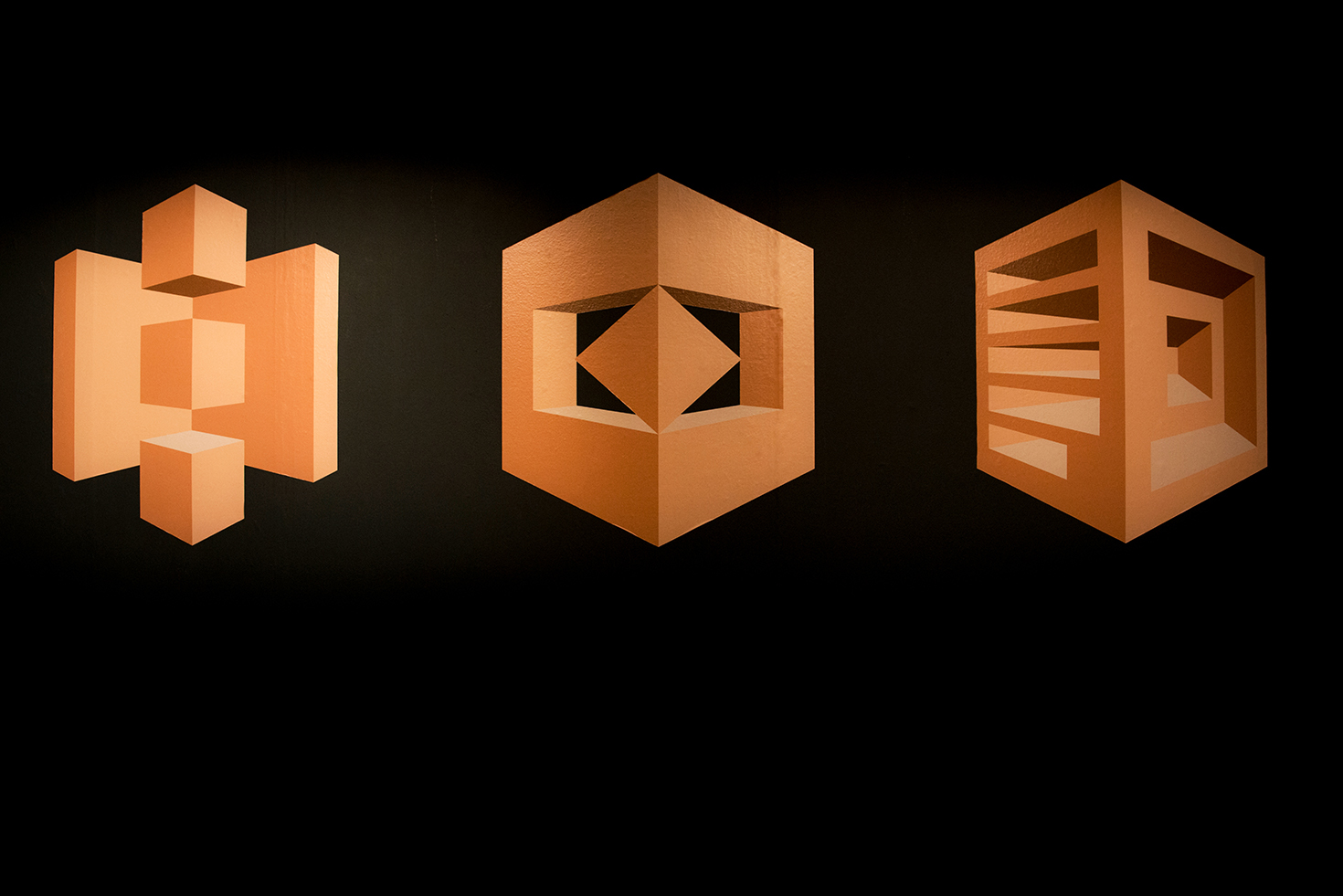

AMc: The bots and the screens are the heart of the exhibition, but there are other works in here, too. For example, there are three large logos on the opposite wall over there. What are those?

NT: Those are the logos of the various software services the bots are using to do their analysis. I became really interested in the shapes. The reason I started this project in the first place was because I’d been working on Backdoored, looking at all of these images taken by surveillance cameras. I started to become really interested in the idea of the active machines looking at the world and what the qualities were of this machine gaze. Then I started to think: “OK, machines are taking pictures of the world, but they’re also learning to look at the world, so what are they learning to see in their images?” I did a test with a selection of these machine-generated images I had collected and there was one image that was just a picture of a small dog sitting on a pavement, with some flowers, some grey walls and so on. I ran it through a commercial image-recognition system and, when the results came back, it was one of those moments that makes the hairs on the back of your neck stand up: it came back with descriptions such as “battleship”, “aeroplane”, “warplane” – all of these really super-militaristic terms. Obviously, this technology isn’t perfect and clearly it gets things wrong, but it really reminded me that all the really big, cutting-edge development in machines learning to look at the world and analyse it, is all coming from the military. It’s coming from homeland security, and it’s all built around threat detection. So, although I was using a commercial service, it made me think of this bleeding of a paranoid machine gaze into the general public domain. That’s when I thought, OK, let’s do some really upscaled research and just see exactly how these machines are learning to look at the world. What do they see and what don’t they see? How do they characterise what they see? Because the way the machines see the world has an increasingly direct impact on us. We create the tools; the tools recreate us. What is the impact of this new machine vision?

I was also thinking about the power vested in the software services that make these decisions as to how to describe a scene, what things to point out, what things not to talk about, and also the kind of terminology that they use to describe it. It is an immensely powerful act.

So, to come back to the logos, I was also really interested in how the designers had made these logos for the different commercial services. They’re quite monumental, aren’t they? They almost look to me a bit like they could be architecture, built by dictators.

AMc: Yes, I was just thinking about Hitler’s rally grounds in Nuremberg.

NT: Exactly, it’s that kind of thing. They are symbols, which are really vested with power. What’s interesting and really important, I think, is that these services, such as Amazon, which is the biggest cloud services supplier in the world, are the ones I’m using for the Seeker bots, but they are also used by governments and big corporations. The reach of these things is huge. These virtual monuments have enormous power, which nobody sees. They’re behind the scenes, working away, and the amount of power vested in them to define and actualise the world is huge. That’s why I wanted to recreate the logos and make them really big. I recoloured them to emphasise the architectural aspect. Then I placed them alongside these holiday photos that I took a couple of years ago in Jordan. We were travelling to a Roman city, and I noticed the municipality had embedded these surveillance cameras in the stone monuments, which was just such a crazy thing! I think this set something off in the back of my mind – the process that led to the Seeker bots. I thought it would be interesting to look at the photographs in conjunction with the logos and to think about them as symbols of state or colonial, imperial power: one made 2,000 years ago from heavy stone; these others, just as powerful, but made from code. They’re nothing. Most people don’t even know they’re there. They’re unseen power structures.

The final piece in the show is this video here, which is from The Seeker project, before I made the bots. The Seeker, as I said, is this machine that travelled the world for two years, collecting tens of thousands of images, and was then trying to describe what it saw. As I was going through all the words and concepts it saw, and the labels, I was really intrigued by how often it identified images of the universe, and how keen it was to do so. All of these images are places where the Seeker saw the universe. There was something poetic and almost sad about this craving to see outer space in what’s often something quite mundane. I first of all made this as a web app, but projecting it here in this gallery space has completely changed it. It makes me think of the quote about seeing the universe in a grain of sand.

AMc: How did you get into working with machines and the machine gaze in the first place? You clearly understand a lot of the technology involved. Do you have a background in AI?

NT: I did a degree in fine art originally, and then I started working in online technologies. I spent 20 years designing software interfaces and project managing software. In 2011, I decided to come back to the art world. I did an MA, but I had no idea what I wanted to do then; I was just drawing. Then, somehow, all the technology, and the conversations and the ideas that had seeped in from my work life, started to come through. It happened gradually, but then, suddenly, I realised that was what my work was: designing and using these investigative software systems. Then it kind of made sense. Although I don’t code, I know enough about the technologies and how they work to be able to put together a sensible brief in terms of what’s meaningfully achievable. I made the bots, but I didn’t write the software that operates them – that was written by a couple of developer friends. I designed the system and the software architecture, but my friends wrote the code to run them. There are many important discourses going on in the engineering domain, but, because they’re so abstruse, most of the rest of the world isn’t aware of them and would struggle to understand them, because it requires such a huge amount of domain knowledge. I think there’s a valuable role in looking at what’s happening there and bringing it into a new domain and examining it in a different way. It is also about introducing it to people who didn’t know that this stuff was happening, and presenting it in a way that makes things more available to people outside the engineering world.

AMc: It seems you’ll never be stuck for material, working on projects like these.

NT: Absolutely never. That’s why the projects last for years and gently morph into other projects. That’s the way I work. I have something I want to explore, so I build a software system, which will go out and investigate it for me. It comes back and brings me something new I didn’t know about the world. Then I spend time, years, analysing things, pulling out outcomes, and then just seeing what I can learn from it all and where it will take me next.

AMc: Do you find it wholly exciting, or is it a little scary at times?

NT: Exciting, definitely. Obviously when you think about paranoid machines and stuff like that, it is existentially scary. And even the idea of machine systems where we are just a minor part is existentially scary, too. But the process of investigation, and when my systems bring me a whole lot of new data to delve into, with no idea what it’s going to tell me or what kind of new insights about the world I’ll have, then that’s brilliant. It’s really addictive.

• Nye Thompson: CKRBT is at Watermans Art Centre, London, until 2 June 2019. Open Codes is at ZKM, Karlsruhe, until 2 June 2019.